Rationalist check-list:

- Incorrect use of analogy? Check.

- Pseudoscientific nonsense used to make your point seem more profound? Check.

- Tortured use of probability estimates? Check.

- Over-long description of a point that could just as easily have been made in 1 sentence? Check.

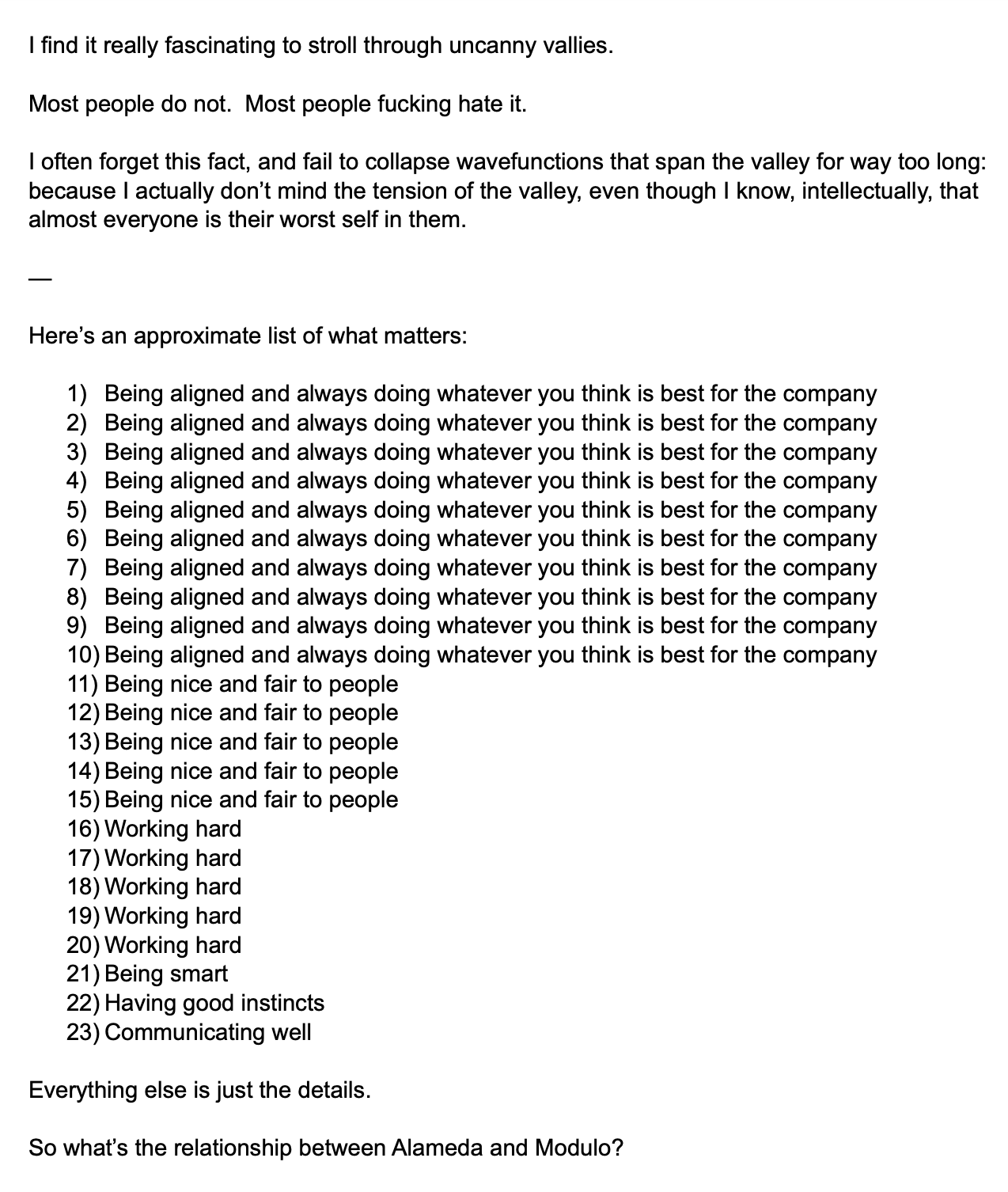

This email by SBF is basically one big malapropism.

This reads very, uh, addled. I guess collapsing the wavefunction means agreeing on stuff? And the uncanny valley is when the vibes are off because people are at each other’s throats? Is ‘being aligned’ like having attained spiritual enlightenment by way of Adderall?

Apparently the context is that he wanted the investment firms under FTX (Alameda and Modulo) to completely coordinate, despite being run by different ex-girlfriends at the time (most normal EA workplace), which I guess paints Elis’ comment about Chinese harem rules of dating in a new light.

edit: i think the ‘being aligned’ thing is them invoking the ‘great minds think alike’ adage as absolute truth, i.e. since we both have the High IQ feat you should be agreeing with me, after all we share the same privileged access to absolute truth. That we aren’t must mean you are unaligned/need to be further cleansed of thetans.

They have to agree, it’s mathematically proven by Aumann’s Agreement Theorem!

Unhinged is another suitable adjective.

It’s noteworthy how the operations plan seems to boil down to “follow your guts” and “trust the vibes”, above “Communicating Well” or even “fact-based” and “discussion-based problem solving”. It’s all very don’t think about it, let’s all be friends and serve the company like obedient drones.

This reliance on instincts, or the esthetics of relying on instincts, is a disturbing aspect of Rats in general.

- me, doing an SBF impression. digital media. 2023

Well they are the most rational people on the planet. Bayesian thinking suggests that their own gut feelings are more likely to be correct than not, obviously.

the amazing bit here is that SBF’s degree is in physics, he knows the real meanings of the terms he’s using for bad LW analogies

I love the notion that the tension between the two was “created out of thin air,” and the solution is to drag both parties into it and make them say “either I agree with you or I’m a big stupid dummy who doesn’t care about my company.”

As cringe as “wave function collapse” and “uncanny valley” are for the blatant misuse here, “alignment” is just another rich asshole meme right now. “Alignment” is just a fancy way to say “agreeing with me.” They want employees “aligned” with their “vision” aka indulging every stupid whim without pushback. They want AI to be “aligned” too and are extremely frightened that because they understand absolutely nothing about computers, there’s a possibility a machine might NOT do whatever stupid thing they say, so every software needs backdoors and “alignment” to ensure the CEO always has a way to force their will.

WFC appears to just mean concepts that he doesn’t understand or doesn’t know about. He’s mistaken the idea that things can be in a complex mix of states for “things haven’t gone my way yet, or I don’t know what I want.” Uncanny Valley he appears to think just means “when I’m uncomfortable and not getting my way.” He, of course, mixes his metaphors and starts talking about “collapsing the valley” which is not a thing.

Fucking moron.

while you appear to be directionally correct with your observations, are you aware where you’re posting and who this sub is about?

because “just another rich asshole meme” is, unfortunately, not all this is. it might be that as well, but it is also something else.

also, if you didn’t know what this was about, and my comment makes you find out: consadulations and welcome to the club

Ayn Rand and Gene Ray would agree, at least with the principle.