cross-posted from: https://lemmy.world/post/31184706

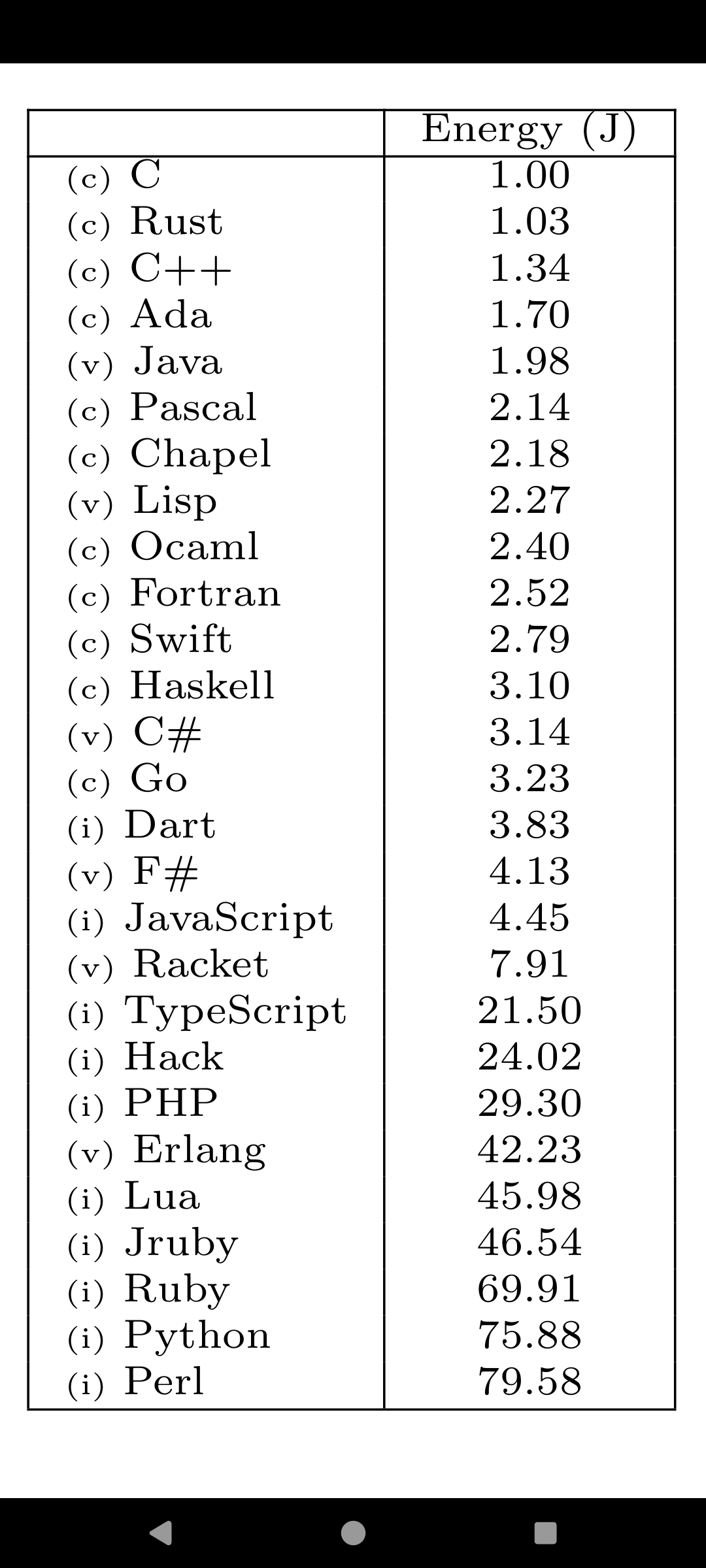

C is one of the top languages in terms of speed, memory and energy

https://www.threads.com/@engineerscodex/post/C9_R-uhvGbv?hl=en

Machine energy, definitely not programmer energy ;)

I would argue that because C is so hard to program in, even the claim to machine efficiency is arguable. Yes, if you have infinite time for implementation, then C is among the most efficient, but then the same applies to C++, Rust and Zig too, because with infinite time any artificial hurdle can be cleared by the programmer.

In practice however, programmers have limited time. That means they need to use the tools of the language to save themselves time. Languages with higher levels of abstraction make it easier, not harder, to reach high performance, assuming the abstractions don’t provide too much overhead. C++, Rust and Zig all apply in this domain.

An example is the situation where you need a hash map or B-Tree map to implement efficient lookups. The languages with higher abstraction give you reusable, high performance options. The C programmer will need to either roll his own, which may not be an option if time is limited, or choose a lower-performance alternative.

I understand your point but come on, basic stuff has been implemented in a thousand libraries. There you go, a macro implementation

I’m not saying you can’t, but it’s a lot more work to use such solutions, to say nothing about their quality compared to std solutions in other languages.

And it’s also just one example. If we bring multi-threading into it, we’re opening another can of worms where C doesn’t particularly shine.

Not sure I understand your comment on multithreading. pthreads are not very hard to use, and you have stuff like OpenMP if you want some abstraction. What about C is not ideal for multithreading?

It’s that the compiler doesn’t help you with preventing race conditions. This makes some problems so hard to solve in C that C programmers simply stay away from attempting it, because they fear the complexity involved.

It’s a variation of the same theme: Maybe a C programmer could do it too, given infinite time and skill. But in practice it’s often not feasible.

And how testable is that solution? Sure, macros are helpful, but testing and debugging them is a mess.

You mean whether the library itself is testable? I have no idea, I didn’t write it, it’s stable and out there for years.

Whether the program is testable? Why wouldn’t it be. I could debug it just fine. Of course it’s not as easy as Go or Python but let’s not pretend it’s some arcane dark art

Yes, I mean mocking, faking, et al. Not this particular library, but macros in general.

This doesn’t account for all the comfort food the programmer will have to consume in order to keep themselves sane

For those who don’t want to open threads, it’s a link to a paper on energy efficiency of programming languages.

Results

Also the difference between TS and JS doesn’t make sense at first glance. 🤷‍♂️ I guess I need to read the research.

My first thought is perhaps the TS is not targeting ESNext so they’re getting hit with polyfills or something

It does, the “compiler” adds a bunch of extra garbage for extra safety that really does have an impact.

I thought the idea of TS is that it strongly types everything so that the JS interpreter doesn’t waste all of its time trying to figure out the best way to store a variable in RAM.

TS is compiled to JS, so the JS interpreter isn’t privy to the type information. TS is basically a robust static analysis tool
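To make the point about erased types concrete, here is a minimal sketch. The names `Point` and `add` are made up for illustration, and the emitted JavaScript shown in the comment is what one would expect from the compiler with default settings, not output taken from the paper's setup.

```typescript
// Type annotations and interfaces exist only at compile time.
interface Point {
  x: number;
  y: number;
}

function add(a: number, b: number): number {
  return a + b;
}

// After compilation the function above is plain JavaScript:
//   function add(a, b) { return a + b; }
// and the interface produces no output at all.

const sum = add(2, 3);
const p: Point = { x: 1, y: 2 };

// At runtime only ordinary JS values remain; there is no `Point` to inspect.
console.log(typeof sum);     // reports the JS runtime type, not a TS type
console.log(Object.keys(p)); // the object carries just its own properties
```

So the engine gets no extra hints from the annotations; whatever it optimizes, it works out from the running code itself.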

The code is ultimately run in a JS interpreter. AFAIK TS transpiles into JS, there’s no TS specific interpreter. But such a huge difference is unexpected to me.

It’s really not. Have you noticed how an enum is transpiled? You end up with a function… A lot of other things follow the same pattern.

Nope, have not noticed because I hate JavaScript with a passion. Thanks for educating me.

No they don’t. Enums are actually unique in being the only Typescript feature that requires code gen, and they consider that to have been a mistake.

In any case that’s not the cause of the difference here.

Care to elaborate?

Here’s a good example: https://stackoverflow.com/questions/47363996/why-does-an-enum-transpile-into-a-function

That is not a good example. That is an immediate function call happening once when the program starts and certainly does not have a large impact like you are suggesting.

Only if you choose a lower language level as the target. Given these results I suspect the researchers had it output JS for something like ES5, meaning a bunch of polyfills for old browsers that they didn’t include in the JS-native implementation…

Not really, because this stuff also happens: https://stackoverflow.com/questions/20278095/enums-in-typescript-what-is-the-javascript-code-doing

A function call always has an impact.

Yeah sure, you found the one notorious TypeScript feature that actually emits code, but a) this feature is recommended against and not used much to my knowledge and, more importantly, b) you cannot tell me that you genuinely believe the use of TypeScript enums – which generate extra function calls for a very limited number of operations – will 5x the energy consumption of the entire program.

This isn’t true, there are other features that “emit code”. That includes namespaces, decorators and in some cases even async / await (when targeting ES5 or ES6).
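For anyone who hasn't looked at the enum output being argued about, here is a rough sketch. The enum `Color` is a made-up example and appears only in a comment; the runnable part is a hand-written equivalent of the JavaScript the compiler typically emits for a numeric enum. `ColorEmitted`, `Lookup` and `initCalls` are names invented for this illustration.

```typescript
// TypeScript source under discussion (shown as a comment):
//   enum Color { Red, Green, Blue }

// Hand-written equivalent of the emitted JavaScript: a function that is
// called once and fills in a two-way lookup object.
type Lookup = Record<string | number, string | number>;
const ColorEmitted: Lookup = {};

let initCalls = 0; // illustration only: counts how often the initializer runs

(function (c: Lookup): void {
  initCalls += 1;
  c[(c["Red"] = 0)] = "Red";     // sets Red -> 0 and 0 -> "Red"
  c[(c["Green"] = 1)] = "Green";
  c[(c["Blue"] = 2)] = "Blue";
})(ColorEmitted);

// Later uses are ordinary property reads; the function is not called again.
const forward = ColorEmitted["Green"];
const reverse = ColorEmitted[1];
console.log(forward, reverse, initCalls);
```

The initializer runs once when the module loads, and every use after that is a plain object lookup. Whether that could add up to anything measurable in the benchmarks is exactly what the comments above disagree on.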

I guess we can take the overhead of rust considering all the advantages. Go however… can’t even.

Even Haskell is higher on the list than Go, which surprises me a lot

But Go has go faster stripes in the logo! Google wouldn’t make false advertising, would they?

Now we just need a language with flames in the logo

For Lua I think it’s just for the interpreted version, I’ve heard that LuaJIT is amazingly fast (comparable to C++ code), and that’s what for example Löve (game engine) uses, and probably many other projects as well.

I would be interested in how things like MATLAB and octave compare to R and python. But I guess it doesn’t matter as much because the relative time of those being run in a data analysis or research context is probably relatively low compared to production code.

Is there a lot of computation-intensive code being written in pure Python? My impression was that the numpy/pandas/polars etc kind of stuff was powered by languages like fortran, rust and c++.

The popular well crafted ones are, but not all are well crafted.

WASM would be interesting as well, because lots of stuff can be compiled to it to run on the web

Indeed, here’s an example - my climate-system model web-app, written in scala running (mainly) in wasm

(note: that was compiled with scala-js 1.17, they say latest 1.19 does wasm faster, I didn’t yet compare).

[Edit: note that the wasm variant only works with the most recent browsers, maybe with experimental options set. If it doesn’t work, try without ?wasm.]

I have no clue what I am looking at but it is absolutely mesmerizing.

Oh, it’s designed for a big desktop screen, although it just happens to work on mobile devices too - their compute power is enough, but to understand the interactions of complex systems, we need space.

Every time I get surprised by the efficiency of Lisp! I guess they mean Common Lisp there, not Clojure or any modern dialect.

Yeah every time I see this chart I think “unless it’s performance critical, realtime, or embedded, why would I use anything else?” It’s very flexible, a joy to use, amazing interactive shell(s). Paren navigation is awesome. The build/tooling is not the best, but it is manageable.

That said, OCaml is nice too.

Looking at the Energy/Time ratios (lower is better) on page 15 is also interesting, it gives an idea of how “power hungry per CPU cycle” each language might be. Python’s very high

For Haskell to land that low on the list tells me they either couldn’t find a good Haskell programmer and/or weren’t using GHC.

Wonder what they used for the JS state since it’s dependent on the runtime.

I have a hard time believing Java is that high up. I’d place it around c#.

Why?

(A super slimmed down flavour of) Java runs on fucking simcards.

In theory Java is very similar to C#, an IL based JIT runtime with a GC, of course. So where is the difference coming from between the two? How is it better than Pascal, a compiled language? These are the questions I’m wondering about.


I’m using the fattest of java (Kotlin) on the fattest of frameworks (Spring boot) and it is still decently fast on a 5 year old raspberry pi. I can hit precise 50 μs timings with it.

Imagine doing it in fat python (as opposed to micropython) like all the hip kids.

And it powers a lot of phones. People generally don’t like it when their phone needs to charge all the freaking time.

I ran Linux with KDE on my phone for a while and it for sure needed EVEN MORE charging all the time even though most of the system is C, with a sprinkle of C++ and Qt.

But that is probably due to other inefficiencies and lack of optimization (which is fine, make it work first, optimize later)

Yeah, and Android has had some 16 years of “optimize later”. I have some very very limited experience with writing mobile apps and while I found it to be a PITA, there is clearly a lot of thought given to how to not eat all the battery and die in the ecosystem there. I would expect that kind of work to also be done at the JVM level.

If Windows Mobile had succeeded, C# likely would’ve been lower as well, just because there’d be more incentive to make a battery charge last longer.

C# has been very optimized since .NET Core (now .NET). So has the JIT compiler and everything around it.


Love the “I reject your empirical data and substitute my emotions” energy.


That definitely raised an eyebrow for me. Admittedly I haven’t looked in a while but I thought I remembered perl being much more performant than ruby and python

etalon

/ˈɛtəlɒn/

noun Physics

noun: etalon; plural noun: etalons

a device consisting of two reflecting glass plates, employed for measuring small differences in the wavelength of light using the interference it produces.

I don’t see how that word makes sense in that phrase


Does the paper take into account the energy required to compile the code, the complexity of debugging and thus the required re-compilations after making small changes? Because IMHO that should all be part of the equation.

It’s a good question, but I think the amount of time spent compiling a language is going to be pretty tiny compared to the amount of time the application is running.

Still - “energy efficiency” may be the worst metric to use when choosing a language.

Energy efficiency strongly correlates to datacentre costs.

And battery costs, including charging time, for a lot of devices. Users generally aren’t happy with devices that run out of juice all the time.

They compile each benchmark solution as needed, following the CLBG guidelines, but they do not measure or report the energy consumed during the compilation step.

Time to write our own paper with regex and compiler flags.

and in most cases that’s not good enough to justify choosing c

I wouldn’t justify using any language based on this metric alone.

For raw computation, yes. Most programs aren’t raw computation. They run in and out of memory a lot, or are tapping their feet while waiting 2ms for the SSD to get back to them. When we do have raw computation, it tends to be passed off to a C library, anyway, or else something that runs on a GPU.

We’re not going to significantly reduce datacenter energy use just by rewriting everything in C.

We’re not going to significantly reduce datacenter energy use just by rewriting everything in C.

We would however introduce a lot of bugs in the critical systems

Ah this ancient nonsense. Typescript and JavaScript get different results!

It’s all based on

https://en.wikipedia.org/wiki/The_Computer_Language_Benchmarks_Game

Microbenchmarks which are heavily gamed. Though in fairness the overall results are fairly reasonable.

Still I don’t think this “energy efficiency” result is worth talking about. Faster languages are more energy efficient. Who knew?

Edit: this also has some hilarious visualisation WTFs - using dendrograms for performance figures (figures 4-6)! Why on earth do figures 7-12 include line graphs?

Typescript and JavaScript get different results!

It does make sense, if you skim through the research paper (page 11). They aren’t using `performance.now()` or whatever the state-of-the-art in JS currently is. Their measurements include invocation of the interpreter. And parsing TS involves bigger overhead than parsing JS.

I assume (didn’t read the whole paper, honestly DGAF) they don’t do that with compiled languages, because there’s no way the gap between compiling C and Rust or C++ is that small.

Their measurements include invocation of the interpreter. And parsing TS involves bigger overhead than parsing JS.

But TS is compiled to JS so it’s the same interpreter in both cases. If they’re including the time for `tsc` in their benchmark then that’s an even bigger WTF.
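To illustrate the two kinds of measurement in this exchange, here is a small sketch of in-process timing with `performance.now()`. The `workload` function is a hypothetical stand-in for a benchmark body. How the paper actually measured is as described in the comments above and is not verified here.

```typescript
// Hypothetical workload standing in for a benchmark body.
function workload(n: number): number {
  let total = 0;
  for (let i = 0; i < n; i++) {
    total += i % 7;
  }
  return total;
}

// In-process timing: the clock starts after the runtime has launched and
// this file has been parsed, so neither cost is included in `elapsedMs`.
const start = performance.now();
const result = workload(1000000);
const elapsedMs = performance.now() - start;

console.log(`result=${result}, in-process time=${elapsedMs.toFixed(3)} ms`);

// Whole-process timing, by contrast, is taken from outside, for example
// with the shell's `time` around the runtime command, and so also counts
// runtime startup and parsing of the script.
```

With in-process timing, TS and JS versions of the same program should look nearly identical once the TS has been compiled ahead of time. With whole-process timing, anything that runs before the first line of the script gets counted too.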

Microbenchmarks which are heavily gamed

Which benchmarks aren’t?

Private or obscure ones I guess.

Real-world (macro) benchmarks are at least harder to game, e.g. how long does it take to launch Chrome and open Gmail? That’s actually a useful task so if you speed it up, great!

Also these benchmarks are particularly easy to game because it’s the actual benchmark itself that gets gamed (i.e. the code for each language); not the thing you are trying to measure with the benchmark (the compilers). Usually the benchmark is fixed and it’s the targets that contort themselves to it, which is at least a little harder.

For example some of the benchmarks for language X literally just call into C libraries to do the work.

Private or obscure ones I guess.

Private and obscure benchmarks are very often gamed by the benchmarkers. It’s very difficult to design a fair benchmark (e.g. Chrome can be optimized to load Gmail, for obvious reasons. Maybe we should choose a fairer website when comparing browsers? But which? How can we know that neither browser has optimizations specific to page X?). Obscure benchmarks are useless because we don’t know if they measure the same thing. Private benchmarks are definitely fun but only useful to the author.

If a benchmark is well established you can be sure everyone is trying to game it.

I just learned about Zig, an effort to make a better C compatible language. It’s been really good so far, I definitely recommend checking it out! It’s early stages for the community, but the core language is pretty developed and is a breath of fresh air compared to C.

Your link links to facebook that links to https://haslab.github.io/SAFER/scp21.pdf

Written in 2021 and not including Julia is weird imo. I’m not saying it’s faster but one should include it in a comparison.

And they used bit.ly on page 5 for references.

Haven’t read it yet, but already seems very non-serious to me.

I also didn’t read it. There’s lots of good comparisons already

To run perhaps. But what about the same metrics for debugging? How many hours do we spend debugging c/c++ issues?

True but it’s also a cock to write in

What if we make a new language that extends it and makes it fun to write? What if we call it c+=1?

If you want top speed, Fortran is faster than C.