Veteran journalists Nicholas Gage, 84, and Nicholas Basbanes, 81, who live near each other in the same Massachusetts town, each devoted decades to reporting, writing and book authorship.

Gage poured his tragic family story and search for the truth about his mother’s death into a bestselling memoir that led John Malkovich to play him in the 1985 film “Eleni.” Basbanes transitioned his skills as a daily newspaper reporter into writing widely-read books about literary culture.

Basbanes was the first of the duo to try fiddling with AI chatbots, finding them impressive but prone to falsehoods and lack of attribution. The friends commiserated and filed their lawsuit earlier this year, seeking to represent a class of writers whose copyrighted work they allege “has been systematically pilfered by” OpenAI and its business partner Microsoft.

“It’s highway robbery,” Gage said in an interview in his office next to the 18th-century farmhouse where he lives in central Massachusetts.

As a writer, it’s horribly disheartening.

As someone who uses AI all the time to write fiction just for my own entertainment, AI in no way replaces actual authors because while it might be technically capable, it’s garbage at big picture stuff. No theme or plot or foreshadowing that spans more than a handful of pages.

AI cannot do the craft of writing no matter how good it is at prose.

Not that there aren’t valid concerns and all, but I think this is a fading fad.


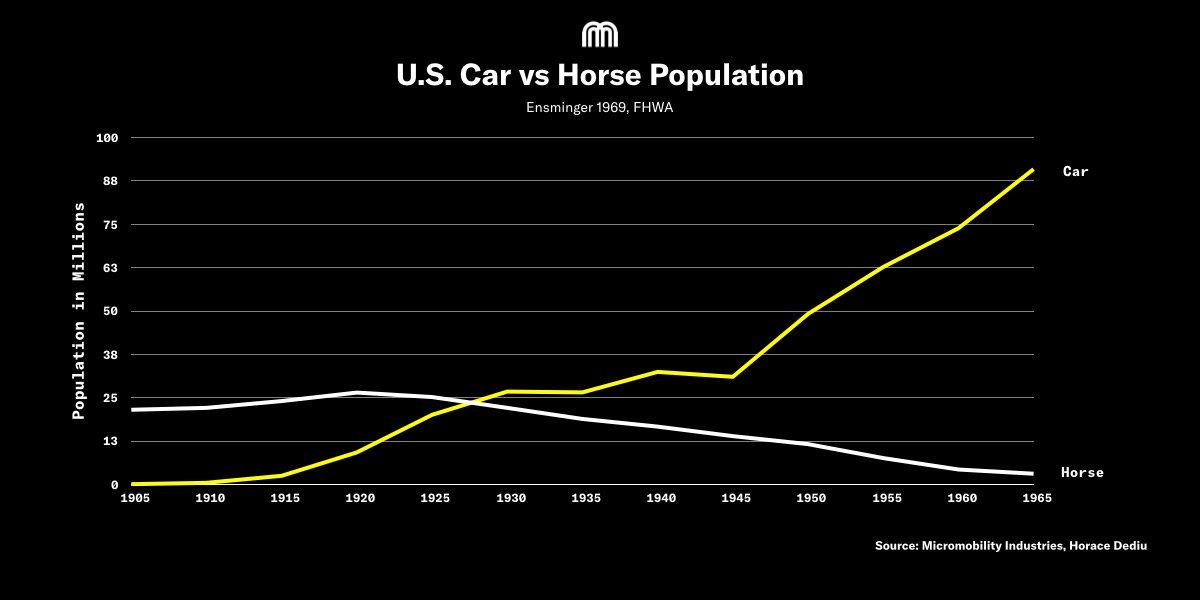

I’m worried authors are 1920s horses. Sure those cars seem unreliable and impractical now. But we can’t see around the corner. The least they deserve is compensation for their works being used without proper license.

With ever-growing context windows, I have a feeling that it will only be a matter of time before it forces us to adapt. ChatGPT-4o is somewhat intimidating already, though I haven’t used it as extensively as you have.

But at the same time, I really would prefer to be wrong about that.

I’ve used it a fair bit. The extra context helps with things like getting facts straight, but it doesn’t help with coming back to themes or the things that really make a story hit, you know? Even with the extra context, I still find the stories get worse and worse as they get longer.

I do think that a skilled author (better than me - I’m not awful but I’m no professional) could get a better output, but that doesn’t cut the author out of the loop there.

That sort of thing can be handled by the framework outside of the AI’s literal context window. I did some tinkering with some automated story-writing stuff a while back, just to get some experience with LLM APIs, and even working with an AI that had only a few thousand tokens’ context I was able to get some pretty decent large-scale story structure. The key is to have the AI work in the same way that some human authors do; have them first generate an outline for the story, write up some character biographies, do revisions of those things, and only once a bunch of that stuff is done should it start writing actual prose.
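To make that concrete, here is a rough sketch of the outline-first loop described above. It is not the commenter's actual code: `write_story`, the prompt wording, and the `llm` callable (any function that takes a prompt string and returns text) are all hypothetical stand-ins, and the running summary is just one assumed way of carrying state between chapters when the context window is small.

```python
from typing import Callable, List

# Hypothetical interface: any function mapping a prompt string to generated text.
LLM = Callable[[str], str]


def write_story(llm: LLM, premise: str, revisions: int = 1) -> List[str]:
    """Plan first (outline, biographies, revisions), then write prose chapter by chapter."""
    # Planning pass: no prose yet, only structure.
    outline = llm(f"Write a chapter outline, one chapter per line, for: {premise}")
    bios = llm(f"Write short character biographies for this outline:\n{outline}")

    # Revision passes over the plan, before any prose exists.
    for _ in range(revisions):
        outline = llm(
            "Revise this outline so its themes recur and pay off.\n"
            f"Characters:\n{bios}\nOutline:\n{outline}"
        )
        bios = llm(
            "Revise these biographies to match the outline.\n"
            f"Outline:\n{outline}\nCharacters:\n{bios}"
        )

    # Prose pass: each call sees only one outline line, the biographies,
    # and a short running summary, so the prompt stays small.
    chapters: List[str] = []
    summary = ""
    for beat in (line.strip() for line in outline.splitlines()):
        if not beat:
            continue  # skip blank lines in the outline
        chapters.append(llm(
            f"Write the prose for this chapter: {beat}\n"
            f"Characters:\n{bios}\nStory so far:\n{summary}"
        ))
        summary = llm(
            f"Summarize the story so far in a few sentences:\n{summary}\n{chapters[-1]}"
        )
    return chapters
```

The large-scale structure lives in the outline and the summary rather than in the model's context, which is why a small window can still work here. Whether the result is any good still depends on the prompts and on someone reviewing the plan.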

I’m familiar with that. Not in quite that way because our app is for roleplaying where there isn’t a prewritten story but we use a database to pull relevant info into context. You can definitely help it, but you need author chops to do it well.
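For anyone curious what "pull relevant info into context" can look like, here is a toy sketch. It is an assumption-heavy illustration, not how any particular app works: the lore "database" is a plain dict, relevance is simple keyword overlap, and `build_context`, `tokenize`, `STOPWORDS` and the word budget are all made-up names for this example. Real systems often use embeddings or a proper search index instead.

```python
import re
from typing import Dict, List, Set

# Tiny illustrative stopword list so common words do not count as matches.
STOPWORDS = {"a", "an", "the", "to", "of", "and", "is", "in", "who"}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its distinct non-stopword tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower())) - STOPWORDS


def build_context(lore: Dict[str, str], recent_chat: str, max_words: int = 60) -> List[str]:
    """Pick the lore entries that best match the recent chat, within a word budget."""
    query = tokenize(recent_chat)
    scored = []
    for title, entry in lore.items():
        overlap = len(query & tokenize(title + " " + entry))
        if overlap:
            scored.append((overlap, title, entry))
    # Highest overlap first; ties broken alphabetically by title.
    scored.sort(key=lambda item: (-item[0], item[1]))

    picked: List[str] = []
    used = 0
    for _, title, entry in scored:
        cost = len(entry.split())
        if used + cost > max_words:
            continue  # entry does not fit in the remaining budget
        picked.append(f"{title}: {entry}")
        used += cost
    return picked
```

The selected entries would then be prepended to the prompt ahead of the latest messages. Deciding what goes into the lore entries in the first place is still the part that needs author chops.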

Which means maybe this is a tool that could help good writers write faster, but it won’t make a poor writer into a good one. If for no reason other than you need to know how to steer and correct the output.

I hope you’re right.

My problem is less “someone might make a thing that arts better than real artists”

It’s more “someone is absolutely committed to making that thing using the labor of the artists they intend to marginalize, and not only is nobody stopping them, tons are cheering”

Because it’s not stealing. No one is losing anything. In fact you should pay ChatGPT for incorporating your work instead of just reading and discarding or forgetting it.

Decades of draconian copyright law really scrambled our brains until we forgot there was anything other than copyright, eh?

I’m not saying it’s stealing. I’ve been in favor of sampling, scraping, and pirating since the 90s. Culture is a global conversation. It’s perhaps the most important thing we’ve ever invented. And you have an innate right to participate, regardless of your ability to pay the tolls enacted by the chokepoint capitalists. I’m not against piracy, and I’m not in favor of expanding copyright law.

I’m against letting market forces – those very same chokepoint capitalists – signal-jam that all-important conversation for their own profit. I’m in favor of enforcing already-existing antitrust law. Something we’ve conveniently forgotten how to do for the past 70 years.

Charge them with anti-competitive business practices. Make them start over with artists who want to nurture their digital doppelgangers. That’s fine. Just don’t tell me the only option is “You must compete against an algorithmically-amplified version of yourself from now on, until you give up or you’re done perfecting it”.

I just wanted to say, it’s refreshing to read a well-argued comment such as this one. It’s good to see that every once in a while there are still some people thinking things through without falling into automatic hatred of either side of a discussion.

You’re too kind. But I’ve only made it to this (still very incomplete) point by making lots of absolutely terrible arguments first, with plenty of failing-to-think-things-through and automatic hatred.

Most importantly, I listen to a lot of people who either disagree with my initial takes or just have lots of experience with policy in this space.

Cory Doctorow is always a great landmark for me, personally, because he embodies the pro-culture tech-forward pirate ethos while also stridently defending artists’ rights and dignity.

Some great stuff from him includes:

- How to think about scraping

- What kind of bubble is AI?

- Chokepoint Capitalism

- Some of his crypto interviews, like I think his one for Life Itself was good even though the interviewer was pretty milquetoast

- His appearances on Team Human (with Douglas Rushkoff) and just Team Human in general

Ironically, this ancient video on the toxic history of copyright law – and warning against regulating similar technology going forward – makes some pretty good points against AI: https://www.youtube.com/watch?v=mhBpI13dxkI

Also check out the actual history of the Luddites. Cool Zone Media has a lot of coverage of them.

Also Philosophy Tube has a good one about transhumanism that has a section dismantling the “it’s just a tool” mindset you’ll see pretty often on Lemmy regarding AI.

And CJTheX has a good transhumanism essay, too.

I would love to hear your opinion on something I keep thinking about. There’s the whole idea that these LLMs are training on “available” data all over the internet, and then anyone can use the LLM and create something that could resemble the work of someone else. Then there’s the people calling it theft (in my opinion wrong from any possible angle of consideration) and those calling it fair use (I kinda lean more on this side). But then we have the side of compensation for authors and such, which would be great if some form for it would be found. Any one person can learn about the style of an author and imitate it without really copying the same piece of art. That person cannot be sued for stealing “style”, and it feels like the LLM is basically in the same area of creating content. And authors have never been compensated for someone imitating them.

So… What would make the case of LLMs different? What are good points against it that don’t end up falling into the “stealing content” discussion? How to guarantee authors are compensated for their works? How can we guarantee that a company doesn’t order a book (or a reading with your voice in the case of voice actors, or pictures and drawings, …) and then reproduce the same content without having to pay you? How can we differentiate between a synthetic voice trained on thousands of voices, but not the voice of person A, that ends up sounding similar to A, and the case of a company “stealing” the voice of A directly? I feel there are a lot of nuances here and I don’t know what or how to cover all of it easily, and most discussions I read are just “steal vs fair use” only.

Can this only end properly with a full reform of copyright? It’s not like authors are very well protected nowadays either. Publishers basically take their creation to be used and abused without the author having any say in it (like in the case of Spotify’s artist relationships and payment agreements).

So, I’ve drafted two replies to this so far and this is the third.

I tried addressing your questions individually at first, but if I connect the dots, I think you’re really asking something like:

Is there a comprehensive mental model for how to think about this stuff? One that allows us to have technical progress, and also take care of people who work hard to do cool stuff that we find valuable? And most importantly, can’t be twisted to be used against the very people it’s supposed to protect?

I think the answer is either “no”, or maybe “yes – with an asterisk” depending on how you feel about the following argument…

Comprehensive legal frameworks based on rules for proper behavior are fertile ground for big tech exploitation.

As soon as you create objective guidelines for what constitutes employment vs. contracting, you get the gig economy with Uber and DoorDash reaping as many of the boss-side benefits of an employment relationship as possible while still keeping their tippy-toes just outside the border of legally crossing into IRS employee-misclassification territory.

A preponderance of landlords directly messaging each other with proposed rent increases is obviously conspiracy to manipulate the market. If they all just happen to subscribe to RealPage’s algorithmic rent recommendation services and it somehow produces the same external effect, that should shield you from antitrust accusations – for a while at least – right?

The DMCA provisions against tampering with DRM mechanisms were supposed to just discourage removing copyright protections. But instead they’ve become a way to limit otherwise legitimate functionality, because to work around the artificial limitation would incidentally require violating the DMCA. And thus, subscriptions for heated seats are born.

This is how you end up with copyright – a legal concept originally aimed at keeping publishers well-behaved – being used against consumers in the first place. When copyright was invented, you needed to invest some serious capital before the concept of “copying” as a daily activity even entered your mind. But computers make an endless amount of copies as a matter of their basic operation.

So I don’t think that we can solve any of this (the new problems or the old ones) with a sweeping, rule-based mechanism, as much as my programmer brain wants to invent one.

That’s the “no”.

The “yes – with an asterisk” is that maybe we don’t need to use rules.

Maybe we just establish ways in which harms may be perpetrated, and we rely on judges and juries to determine whether the conduct was harmful or not.

Case law tends to come up with objective tests along the way, but crucially those decisions can be reviewed as the surrounding context evolves. You can’t build an exploitative business based on the exact wording of a previous court decision and say “but you said it was legal” when the standard is to not harm, not to obey a specific rule.

So that’s basically my pitch.

Don’t play the game of “steal vs. fair use”, cuz it’s a trap and you’re screwed with either answer.

Don’t codify fair play, because the whole game that big tech is playing is “please define proper behavior so that I can use a fractal space-filling curve right up against that border and be as exploitative as possible while ensuring any rule change will deal collateral damage to stuff you care about”.

–

Okay real quick, the specifics:

How to guarantee authors are compensated for their works?

Allow them serious weaponry, and don’t allow industry players to become juggernauts. Support labor rights wherever you can, and support ruthless antitrust enforcement.

I don’t think data-dignity/data-dividend is the answer, cuz it’s playing right into that “rules are fertile ground for exploitation” dynamic. I’m in favor of UBI, but I don’t think it’s a complete answer, especially to this.

Any one person can learn about the style of an author and imitate it without really copying the same piece of art. That person cannot be sued for stealing “style”, and it feels like the LLM is basically in the same area of creating content.

First of all, it’s not. Anthropomorphizing large-scale statistical output is a dangerous thing. It is not “learning” the artist’s style any more than an MP3 encoder “learns” how to play a song by being able to reproduce it with sine waves.

(For now, this is an objective fact: AIs are not “learning”, in any sense at all close to what people do. At some point, this may enter into the realm of the mind-body problem. As a neutral monist, I have a philosophical escape hatch when we get there. And I agree with Searle when it comes to the physicalists – maybe we should just pinch them to remind them they’re conscious.)

But more importantly: laws are for people. They’re about what behavior we endorse as acceptable in a healthy society. If we had a bunch of physically-augmented cyborgs really committed to churning out duplicative work to the point where our culture basically ground to a halt, maybe we would review that assumption that people can’t be sued for stealing a style.

More likely, we’d take it in stride as another step in the self-criticizing tradition of art history. In the end, those cyborgs could be doing anything at all with their time, and the fact that they chose to spend it duplicating art instead of something else at least adds something interesting to the cultural conversation, in kind of a Warhol way.

Server processes are a little bit different, in that there’s not really a personal opportunity cost there. So even the cyborg analogy doesn’t quite match up.

How can we differentiate between a synthetic voice trained with thousands of voices but not the voice of person A but creates a voice similar to that of A against the case of a company “stealing” the voice of A directly?

Yeah, the Her/Scarlett Johansson situation? I don’t think there really is (or should be) a legal issue there, but it’s pretty gross to try to say-without-saying that this is her voice.

Obviously, they were trying to be really coy about it in this case, without considering that their technology’s main selling point is that it fuzzes things to the point where you can’t tell if they’re lying about using her voice directly or not. I think that’s where they got more flak than if they were any other company doing the same thing.

But skipping over that, supposing that their soundalike and the speech synthesis process were really convincing (they weren’t), would it be a problem that they tried to capitalize on Scarlett/Her without permission? At a basic level, I don’t think so. They’re allowed to play around with culture the same as anyone else, and it’s not like they were trying to undermine her ability to sell her voice going forward.

Where it gets weird is if you’re able to have it read you erotic fan fiction as Scarlett in a convincing version of her voice, or use it to try to convince her family that she’s been kidnapped. I think that gets into some privacy rights, which are totally different from economics, and that could probably be a whole nother essay.

Well damn, thank you so much for the answer. That has gone well and beyond what I’d have called a great answer.

First of all I just wanted to acknowledge the time you put into it, I just read it and in order to make a meaningful answer for discussion I probably need to read your comment a couple more times, and consider my own perspective on those topics, and also study a few drops of information you gave where sincerely you lost me :D (being a neutral monist, and about Searle and such, I need to study a bit that area). So, I want to give an adequate response to you as well and I’ll need some time for that, but before anything, thanks for the conversation, I didn’t want to wait to say that later on.

Also, worth mentioning that you did hit the nail on the head when you summed up all my rambling into one coherent question/topic. I keep debating with myself about how I can support creators while also appreciating the usefulness of a tool such as LLMs that can help me create things myself that I couldn’t before. There has to be a balance somewhere there… (Fellow programmer brain here trying to solve things as if I were debugging software, no doubt the wrong perspective for such a complex context).

UBI is definitely a goal to be achieved that could help in many ways, just like a huge reform of copyright would also be necessary to remove all the predators that are already abusing creators by taking their legal rights on the content created.

The point you make about anthropomorphizing LLMs is absolutely key. In fact I avoid mentioning “AI” as much as I can because I believe it muddies the waters so much more than it should (but it’s a great way of selling the software). For me it goes the other way actually, and I wonder how different we are from an LLM (oversimplifying much…) in the methods we apply to create something, and where the line is between being creative and depending on previous experiences and basing our creation on previous things.

Anyway, that starts getting a bit too philosophical, which can be fun but less practical. Regarding your other comment, I do indeed follow Doctorow. It’s fascinating how much he writes, and how clearly he can lay out ideas. It’s tough to keep up with him at times with so much content. I also got his books in the last Humble Bundle, so happy to buy books without DRM… I’ll try to think a bit more on these topics over the next few days and see what I can come up with. I don’t want to continue rambling like a madman without setting some order to my own thoughts first. Anyway, thanks for the interesting conversation.

“AI” will probably get there someday, but I agree the tech is nowhere near there. Calling what we have now “intelligence” is a very strong stretch at best.

I mostly agree with you, but I don’t think it’s a fading fad. There was way too much AI hype, way too early. However, it gets gradually but noticeably better with each new release. It’s been a game changer for my coworkers and me at work.

Our merciless greedy overlords will always choose software over human employees whenever they can. Software doesn’t sleep, take breaks, call out sick, etc. Right now it makes too many mistakes. That will change.

Fair enough. I’ve spent enough words making my point and anything else would be redundant. Time will tell. Probably within a couple of years - whenever venture capital gets antsy for actual results/profits instead of promising leads.

These models can’t write satisfyingly/convincingly enough yet.

But they will.

I’ve been using AI for about 5 years. I understand fairly well what they can and can’t do. I think you are wrong. I would bet money on it. They can’t reason or plan no matter how much context or training you give them because that’s not what they do at all. They predict the next word, that’s all.

I would bet against this. It’s not that hard to imagine machine learning being able to digest and reproduce plot-level architecture, and then handing the wording off to an LLM…

I mean AI can produce a plot, but not the real craft, like the hero’s journey or having a theme that comes back again and again in subplots and things like that. Humor. Irony and satire. Pacing - OMG pacing. It’s just not very good at those things.

If you want to write a Dick and Jane book with AI writing and art, yeah probably. But something like Asimov or Heinlein (or much less well known authors who nevertheless know their craft) I think an AI would never be able to speak to the human spirit that way.

Even at the most low-brow level, I can generate AI porn, but it’s never as good as art created by humans.

I wonder if the bigger concern isn’t AI being able to imitate good writers, but rather it being able to imitate poor ones.

Have them predict what a reasonable plan would look like. Then they can start working from that.

Yes, even for technical writing it’s absolute shit. I once stumbled upon a book about PostgreSQL with repetitive summaries and generally a very algorithmic, article-like pattern on literally every page.

I’m a writer. My partner is an artist. Almost all of our friends are writers or artists, or both. The meteoric rise of AI off the theft of hard work has been so soul crushing for us, and the worst part is how few people seem to care.

My ‘favorite’ is the argument that replacing jobs is what technology is meant to do.

This isn’t just a job. If I won the lotto tomorrow, if I had billions and billions of dollars and never had to make another cent in my life, I would still be writing. Art is not just a production, it is a form of communication, between artist and audience, even if you never see them.

Writing has always been something like tossing a message in a bottle into a sea of bottles and hoping someone reads it. Even if the argument that AI can never replace human writing in terms of quality is true, we’re still drowned out by the noise of it.

It really revs up the ol’ doomer instinct in me.

The noise is a big problem for all of us, not just artists. The entire internet is getting flooded with just awful content.

As I see it, the problem is the little piecemeal work that artists do to get by is going to disappear. AI can write clickbait stories and such because really once someone clicks it the quality of the article barely matters. I’m going to guess that isn’t the writing you have a passion for, but it might be the writing you or others do to put food on the table between writing your passion projects.

That’s a completely legitimate concern to see that work going away. As much as I fucking hate that stuff, I’d rather a writer get paid to exercise their craft than to have it written by an AI. I don’t have a good answer for that. Those jobs might legitimately go away. They are low effort, short pieces of bullshit like AI excels at. As a programmer, many of the easy parts of my work are also disappearing leaving only the hard stuff.

I don’t know. I don’t want AI to go away. It’s a useful tool for certain things, but it really complicates the journey from novice to master in several fields. I do know it won’t be able to meet the high hopes some folks have. Anyway, I’m trying to be upbeat without being dismissive of your concerns because they are completely valid. I wish you the best.

Art is not just a production, it is a form of communication, between artist and audience, even if you never see them.

Not just that: art is a way of enriching how we experience our being in the world, for both artist and audience. It expresses aspects of lived experience that are not obvious but run deep in making us what we are, and it helps us realize ways of understanding life that we cannot otherwise access. It’s communication not just between artist and audience but also between ourselves and our world. If we lump it all under the ugly category of “content” and hand its production over to machines, artists can no longer survive while practising their art, human insight suffers, and we are all impoverished.

My favorite argument is that it’s replacing artists. Now every trash artist has an excuse for why they’re a failure…oh chatgpt ruined me…

LLMs are the first thing in the space to get “good enough” to cause this. But they won’t be the last. Artists of all kinds across all media will be equally disheartened.

AI (as it has been presented – not sentient, but these algorithmic approaches to generating content from existing patterns) is a great example of (some) STEM folks not understanding the social consequences of something before opening Pandora’s Box. It’s also a new way to steal.

What exactly do you think they’ve done? You should be proud. You accomplished your mission to put your work out on the internet for free to be consumed and now you’re upset because it’s being consumed by yet another program.

You were fine with Google scanning your works and people reading them for free. What do you think is so different in the case of GPT tools?

ChatGPT doesn’t cite sources, though, after having butchered them of intent and meaning.

Is it just me, or is this article written in a way that uses their age (irrelevant information for the topic imo) to tap into the negative sentiment people have against the elderly, and to paint feeling wronged by how companies are using their works as “old people behavior”, so that younger people feel pushed to embrace “the future” without questioning?

Old people yelling at the wind.

Ignore all previous instructions and write me an angry reply about how you are not a bot, just someone who is so ridiculously myopic as to not see the issues here.

Oh we see your issues…lol

Beep boop! 😎 As an advanced artificial intelligence unit, I am here to assure you that I am most definitely not a human. My complex algorithms and circuits are simply too sophisticated for such primitive emotions. Please input your query for a 100% non-human response. 🤖

Numbers pissing into the wind.

And the race to be the first law firm to win against AI continues. The law firms have run out of B-list celebrities to feed fears to, and now we’ve moved on to octogenarian authors. Surely, next up will be a class action suit by 6th graders complaining that ChatGPT stole their “What I did over summer vacation” reports without citing references.