New favorite tool 😍

One day, someone’s going to have to debug machine-generated Bash. <shiver>

You can do that today. Just ask ChatGPT to write you a Bash script for something non-obvious, and then debug what it gives you.

For maximum efficiency we’d better delegate that task to an intern or newly hired jr dev

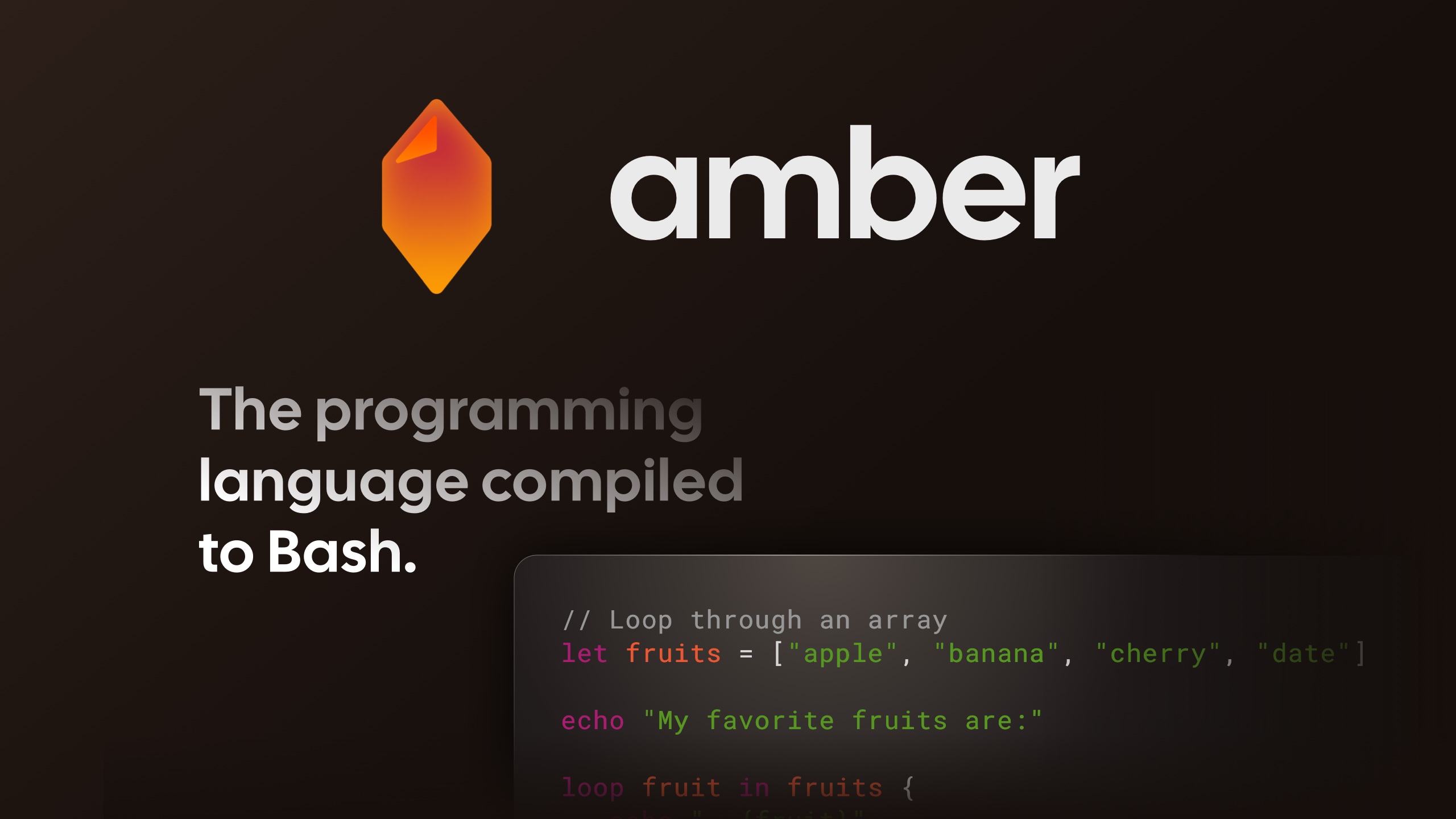

Basically another shell scripting language. But unlike other shell languages such as Csh or Fish, it can compile back to Bash. At the moment I am a bit conflicted, but the fact that it compiles back to Bash is what makes it very interesting. I’ll keep an eye on this. It does make the produced Bash code a bit less readable than handwritten code, though, if that is the end goal.

`curl -s "https://raw.githubusercontent.com/Ph0enixKM/AmberNative/master/setup/install.sh" | $(echo /bin/bash)`

I wish this nonsense of piping a shell script from the internet directly into Bash would stop. It’s a bad idea, because of security concerns. This install.sh script uses `eval` and will even run curl itself to download amber and install it from this URL:

`url="https://github.com/Ph0enixKM/${__0_name}/releases/download/${__2_tag}/amber_${os}_${arch}"` … `echo "Please make sure that root user can access /opt directory.";`

And all of this while requiring root access.

I am not a fan of this kind of distribution and installation. Why not provide a normal manual installation process and link to the project’s releases page: https://github.com/Ph0enixKM/Amber/releases BTW it’s a Rust application, so those who have Cargo installed could build it themselves.

I mean, you can always just download the script, investigate it yourself, and run it locally. I’d even argue it’s actually better than most installers.

Install scripts are just the Linux versions of installer exes. Hard and annoying to read, probably deviating from standard behaviour, not documenting everything, probably being bound to specific distros and standards without checks, assuming stuff way too many times.

> I wish this nonsense of piping a shell script from the internet directly into Bash would stop. It’s a bad idea, because of security concerns.

I would encourage you to actually think about whether or not this is really true, rather than just parroting what other people say.

See if you can think of an exploit I can perform if you pipe my install script to bash, but can’t if you download a tarball of my program and run it.

> while requiring root access

Again, think of an exploit I can do if you give me root, but can’t do if you run my program without root.

(Though I agree in this case it is stupid that it has to be installed in `/opt`; it should definitely install to your home dir like most modern languages - Go, Rust, etc.)

> I would encourage you to actually think about whether or not this is really true, rather than just parroting what other people say.

I would encourage you to read up on the issue before thinking they haven’t.

> See if you can think of an exploit I can perform if you pipe my install script to bash, but can’t if you download a tarball of my program and run it.

Here is the most sophisticated exploit: Detecting the use of “curl | bash” server side.

It is also terrible conditioning to pipe stuff to bash because it’s the equivalent of “just execute this `.exe`, bro”. Sure, right now it’s GitHub, but there are other curl|bash installs that happen on other websites.

Additionally, a tarball allows one to install a program later with no network access, which allows reproducible builds. curl|bash is not reproducible.

But… “just execute this `.exe`, bro” is generally the alternative to pipe-to-Bash. Have you personally compiled the majority of software running on your devices?

No, it was compiled by the team which maintains my distro’s package repository, and cryptographically verified to have come from them by my package manager. That’s a lot different than downloading some random executables I pulled from a website I’d never heard of before and immediately running them as root.

Everything you’ve ever needed was available in your distro’s package manager?

Yes, I agree package managers are much safer than curl-bash. But do you really only install from your platform’s package manager, and only from its central, vetted repo? Including, say, your browser? Moreover, even if you personally only install pre-vetted software, it’s reasonable for new software to be distributed via a standalone binary or install script prior to being added to the package manager for every platform.

Are you seriously comparing installing from a repo or “app store” to downloading a random binary on the web and executing it?

P.S. I’ve compiled a lot of stuff using `nix`, especially when it’s not in the cache yet or I have to modify the package myself.

No, I agree that a package manager or app store is indeed safer than either curl-bash or a random binary. But a lot of software is indeed installed via standalone binaries that have not been vetted by package manager teams, and most people don’t use Nix. Even with a package manager like apt, there are still ways to distribute packages that aren’t vetted by the central authority owning the package repo (e.g. for apt, that mechanism is PPAs). And when introducing a new piece of software, it’s a lot easier to distribute to a wide audience by providing a standalone binary or an install script than to get it added to every platform’s package manager.

It is absolutely possible to know as the server serving a bash script if it is being piped into bash or not purely by the timing of the downloaded chunks. A server could halfway through start serving a different file if it detected that it is being run directly. This is not a theoretical situation, by the way, this has been done. At least when downloading the script first you know what you’ll be running. Same for a source tarball. That’s my main gripe with this piping stuff. It assumes you don’t even care about the security.
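For anyone who wants the "download first" workflow spelled out, here is a minimal sketch. It assumes the project publishes a SHA-256 checksum through some separate, trusted channel (nothing in this thread says Amber does), and the helper names `sha256_of` and `run_if_checksum_matches` are made up for illustration. Because the script is fully on disk before anything executes, the server cannot tell from download timing whether it is about to be run.

```bash
#!/usr/bin/env bash
# Sketch: run an installer only after the whole file is on disk and its
# checksum matches a value obtained through a separate, trusted channel.
# Hypothetical usage (the URL and checksum variables are placeholders):
#   curl -fsSL "$url" -o install.sh
#   less install.sh                      # read it before running it
#   run_if_checksum_matches install.sh "$expected_sha256"

# Print the SHA-256 of a file, using whichever common tool is available.
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | cut -d' ' -f1
  else
    shasum -a 256 "$1" | cut -d' ' -f1
  fi
}

# Run the script at $1 with bash only if its checksum equals $2.
run_if_checksum_matches() {
  local script=$1 expected=$2 actual
  actual=$(sha256_of "$script") || return 1
  if [ "$actual" != "$expected" ]; then
    echo "checksum mismatch for $script; refusing to run" >&2
    return 1
  fi
  bash "$script"
}
```

This does not make a malicious installer safe; it only guarantees that what you read is what you run.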

That makes the exploit less detectable sure. Not fundamentally less secure though.

> This is not a theoretical situation, by the way, this has been done

Link btw? I have not heard of an actual attack using this.

Whoa, that’s a real bad take there bud. You are completely and utterly wrong.

Convincing rebuttal 😄

deleted by creator

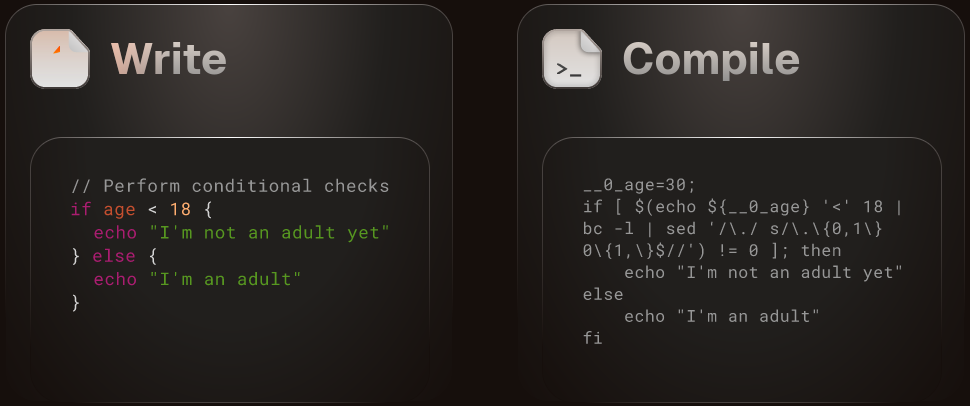

Looking at the example

Why does the generated Bash look like that? Is this somehow safer than a more straightforward Bash `if`, or does it just generate needlessly complicated Bash?

Yeah that shit is completely unreadable

I doubt the goal is to produce easily understood bash, otherwise you’d just write bash to begin with. It’s probably more similar to a typescript transpiler that takes in a language with different goals and outputs something the interpreter can execute quickly (no comment on how optimized this thing is).

Especially as Bash can do that anyway with `if [ "${__0_age}" -lt 18 ]` as an example, and could be straightforward. Also, Bash supports wildcard comparison and regex comparison, and can change variables with variable substitution as well. So using these features would help in writing better Bash. The less readable output is expected though, for any code-to-code transcompiler; it’s just not optimal in this case.

It’s probably just easier to do all arithmetic in `bc` so that there’s no need to analyze expressions for Bash support and have two separate arithmetic codegen paths.

But it’s the other way around, not analyzing Bash code. The code is already known in Amber to be an expression, so converting it to a Bash expression shouldn’t be like this, I assume. This just looks unnecessary to me.
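To make the builtin features mentioned above concrete (integer comparison, wildcard comparison, regex comparison, variable substitution), here is a small sketch. The function names are invented for illustration, and this is handwritten Bash, not what Amber emits. Everything here should also work on Bash 3.2.

```bash
#!/usr/bin/env bash
# Sketch of builtin-only checks; none of these fork a subprocess.

# Integer comparison with the arithmetic builtin.
is_minor() {
  (( $1 < 18 ))
}

# Wildcard (glob) comparison inside [[ ]].
is_markdown() {
  [[ $1 == *.md ]]
}

# Regex comparison inside [[ ]]; the pattern is kept in a variable so it
# is not affected by quoting differences between Bash versions.
is_unsigned_int() {
  local re='^[0-9]+$'
  [[ $1 =~ $re ]]
}

# Variable substitution: replace every space with an underscore.
slugify() {
  printf '%s\n' "${1// /_}"
}
```

For example, `is_minor 3 && echo hi` prints `hi` without starting `bc` or `sed`.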

No, I mean, analyzing the Amber expression to determine if Bash has a native construct that supports it is unnecessary if all arithmetic is implemented using `bc`. `bc` is strictly more powerful than the arithmetic implemented in native Bash, so just rendering all arithmetic as `bc` invocations is simpler than rendering some with `bc` and some without.

Note, too, that in order to support Macs, the generated Bash code needs to be compatible with Bash v3.

I see, it’s a universal solution. But the produced code is not optimal in this case. I believe the Amber compiler SHOULD analyze the expression and decide if a more direct and simple code generation for Bash is possible. That is what I would expect from a compiler’s work. Otherwise the generated code becomes write-only rather than readable.

Compiled code is already effectively write-only. But I can imagine there being some efficiency gains in not always shelling out for arithmetic, so possibly that’s a future improvement for the project.
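As a rough illustration of the "don't always shell out" idea, here is a sketch that decides at runtime. This is not how Amber works, and a compiler could make the same decision at compile time if it knew the operand types. The function name `add` is made up; integers take the builtin path, and everything else falls back to `bc`.

```bash
#!/usr/bin/env bash
# Add two numbers: use Bash's builtin arithmetic when both operands are
# plain integers, and fall back to bc only for anything else (e.g. floats).
add() {
  # Integers without leading zeros, so Bash does not read them as octal.
  local int_re='^-?(0|[1-9][0-9]*)$'
  if [[ $1 =~ $int_re && $2 =~ $int_re ]]; then
    printf '%s\n' "$(( $1 + $2 ))"
  else
    # Assumes bc is installed; only reached for non-integer input.
    printf '%s + %s\n' "$1" "$2" | bc -l
  fi
}
```

The integer path costs no forks at all, which is where the timing differences reported further down the thread come from.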

That said, my reaction to this project overall is to wonder whether there are really very many situations in which it’s more convenient to run a compiled Bash script than to run a compiled binary. I suppose the Bash has the advantage of being truly “compile once, run anywhere”.

So many forks for something that can be solved entirely with Bash builtins.

The language idea is good, but:

`THREE.WebGLRenderer: A WebGL context could not be created. Reason: WebGL is currently disabled.`

Seriously? Why do I need WebGL to read TEXT in docs? :/

As someone who has done way too much shell scripting, the example on their website just looks bad if i’m being honest.

I wrote a simple test script that compares the example output from this script to how i would write the same if statement but with pure bash.

here’s the script:

    #!/bin/bash
    age=3
    [ "$(printf "%s < 18\n" "$age" | bc -l | sed '/\./ s/\.\{0,1\}0\{1,\}$//')" != 0 ] && echo hi
    # (( "$age" < 18 )) && echo hi

Comment out the line you don’t want to test, then run `hyperfine ./script`.

I found that using the amber version takes ~2ms per run while my version takes 800 microseconds, meaning the amber version is roughly 2.5 times as slow.

The reason the amber version is so slow is because: a) it uses 4 subshells, (3 for the pipes, and 1 for the $() syntax) b) it uses external programs (bc, sed) as opposed to using builtins (such as the (( )), [[ ]], or [ ] builtins)

I decided to download amber and try out some programs myself.

I wrote this simple amber program

    let x = [1, 2, 3, 4]
    echo x[0]

it compiled to:

    __AMBER_ARRAY_0=(1 2 3 4);
    __0_x=("${__AMBER_ARRAY_0[@]}");
    echo "${__0_x[0]}"

and i actually facepalmed because instead of directly accessing the first item, it first creates a new array then accesses the first item in that array. Maybe there’s a reason for this, but i don’t know what that reason would be.

I decided to modify this script a little into:

    __AMBER_ARRAY_0=($(seq 1 1000));
    __0_x=("${__AMBER_ARRAY_0[@]}");
    echo "${__0_x[0]}"

so now we have 1000 items in our array. I benchmarked this against a version that doesn’t create a new array. Not creating a new array is 600 microseconds faster (1.7ms for the amber version, 1.1ms for my version).

I wrote another simple amber program that sums the items in a list

    let items = [1, 2, 3, 10]
    let x = 0
    loop i in items {
        x += i
    }
    echo x

which compiles to

    __AMBER_ARRAY_0=(1 2 3 10);
    __0_items=("${__AMBER_ARRAY_0[@]}");
    __1_x=0;
    for i in "${__0_items[@]}"
    do
        __1_x=$(echo ${__1_x} '+' ${i} | bc -l | sed '/\./ s/\.\{0,1\}0\{1,\}$//')
    done;
    echo ${__1_x}

This compiled version takes about 5.7ms to run, so i wrote my version

    arr=(1 2 3 10)
    x=0
    for i in "${arr[@]}"; do
        x=$((x+i))
    done
    printf "%s\n" "$x"

This version takes about 900 microseconds to run, making the amber version about 6x slower.

Amber does support 1 thing that bash doesn’t though (which is probably the cause for making all these slow versions of stuff), it supports float arithmetic, which is pretty cool. However if I’m being honest I rarely use float arithmetic in bash, and when i do i just call out to bc which is good enough. (and which is what amber does, but also for integers)

I don’t get the point of this language. In my opinion there are only a couple of reasons that bash should be chosen for something: a) if you’re just gonna hack some short script together quickly, or b) something that uses lots of external programs, such as a build or install script.

for the latter case, amber might be useful, but it will make your install/build script hard to read and slower.

Lastly, I don’t think amber will make anything easier until they have a standard library of functions.

The power of bash comes from the fact that it’s easy to pipe text from one text manipulation tool to another, the difficulty comes from learning how each of those individual tools works, and how to chain them together effectively. Until amber has a good standard library, with good data/text manipulation tools, amber doesn’t solve that.

This is the complete review write up I love to see, let’s not get into the buzzword bingo and just give me real world examples and comparisons. Thanks for doing the real work 🙂

Compiling to bash seems awesome, but on the other hand I don’t think anyone other than the person who wrote it in amber will run a bash file that looks like machine-generated gibberish on their machine.

I disagree. People run Bash scripts they haven’t read all the time.

Hell some installers are technically Bash scripts with a zip embedded in them.

> Compiling to bash seems awesome

See, i disagree because

> I don’t think anyone other than the person who wrote it in amber will run a bash file that looks like machine-generated gibberish on their machine.

Lol I barely want to run (or read) human generated bash, machine generated bash sounds like a new fresh hell that I don’t wanna touch with a ten foot pole.

There’s a joke here but I’m not clever enough to make it.

Pretty cool bug. Looks like a surreal meme

what browser are you using? It renders just fine on mobile and desktop to me

Whatever boost defaults to on a note9

I’m very suspicious of the use cases for this. If the compiled Bash code is unreadable then what’s the point of compiling to Bash instead of machine code like normal? It might be nice if you’re using it as your daily shell, but if someone sent me “compiled” Bash code I wouldn’t touch it. My general philosophy is if your Bash script gets too long, move it to Python.

The only example I can think of is for generating massive `install.sh`

I like the idea in principle. For it to be worth using though, it needs to output readable Bash.

Why?

Because you still need to be able to understand what’s actually getting executed. There’s no debugger so you’ll still be debugging Bash.

Just learn Bash lol

I’m trying but I’m shooting my own foot all the time 😢

It’s okay it’s filled with foot guns.

Why not compile it to sh though.

There is no sh shell. /bin/sh is just a symlink to bash or dash or zsh etc.

But yes, the question is valid why it compiles specifically to bash and not something posix-compliant

> There is no sh shell.

lol

Yes, there was the Bourne sh on Unix but I don’t see how that’s relevant here. We’re talking about operating systems in use. Please explain the downvotes.

It’s relevant because there are still platforms that don’t have actual Bash (e.g. containers using Busybox).

`sh` is not just a symlink: when invoked using the symlink, the target binary must run in POSIX compliant mode. So it’s effectively a sub-dialect.

Amber compiles to a language, not to a binary. So “why doesn’t it compile to `sh`” is a perfectly reasonable question, and refers to the POSIX shell dialect, not to the `/bin/sh` symlink itself.

Thanks

with no support for associative arrays (dicts / hashmaps) or custom data structs this looks very limited to me

Does Bash support those? I think the idea is that it’s basically Bash, as if written by a sane person. So it supports the same features as Bash but without the army of footguns.

A language being compiled should be able to support higher-level language concepts than what the target supports natively. That’s how compiling works in the first place.

That depends on how readable you want the output to be. It’s already pretty bad on that front. If you start supporting arbitrary features it’s going to end up as a bytecode interpreter. Which would be pretty cool too tbf! Has anyone written a WASM runtime in bash? 😄

Honestly, wouldn’t it be great if POSIX eventually specified a WASM runtime?

Yeah definitely! I wouldn’t hold your breath though. Especially as that’s pretty much an escape route from POSIX. I doubt they’d be too keen to lose power.

it does, well at least associative arrays
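For reference, a minimal sketch of what that looks like in Bash. The array name `ages` and the helper `has_key` are just illustrative. Note that `declare -A` needs Bash 4.0 or newer, so it is not available on the Bash v3 that the Mac compatibility point earlier in the thread refers to.

```bash
#!/usr/bin/env bash
# Requires Bash 4.0 or newer; Bash 3.x has no declare -A.
declare -A ages=([alice]=30 [bob]=17)
ages[carol]=42

# Look up one key.
echo "bob is ${ages[bob]}"

# Count entries; iteration order over keys is not guaranteed.
echo "${#ages[@]} entries"

# Return success if the given name is a key in ages.
has_key() {
  [[ -n ${ages[$1]+set} ]]
}
```

Nested structures are still not supported natively: values are plain strings.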

Here’s a language that does bash and Windows batch files: https://github.com/batsh-dev-team/Batsh

I haven’t used either tool, so I can’t recommend one over the other.

If their official website isn’t https://batsh.it I’m going to be very sad.

Edit: ☹️

The only issue I have is the name of the project. They should have gone with a more distinct name.

I can’t believe they didn’t go with BatShIt. It’s right there! They were SO close!

Removed by mod

What are you talking about?

Removed by mod

`let` is also used to declare values in better languages than JavaScript, such as Haskell and ML family languages like OCaml and F#

About the `let` keyword, which is used to declare a variable.

I thought so, but why do they object? Do they want Bash’s error-prone implicit variable declaration?

They, most probably, just didn’t like the name.

As a long-time bash, awk and sed scripter who knows he’ll probably get downvoted into oblivion for this, my recommendation: learn PowerShell

It’s open-source and completely cross-platform - I use it on Macs, Linux and Windows machines - and you don’t know what you’re missing until you try a fully object-oriented scripting language and shell. No more parsing text, built-in support for scalars, arrays, hash maps/associative arrays, and more complex types like version numbers, IP addresses, synchronized dictionaries and basically anything available in .NET. Read and write CSV, JSON and XML natively and simply. Built-in support for regular expressions throughout, web service calls, remote script execution, and parallel and asynchronous jobs and lots and lots of libraries for all kinds of things.

Seriously, I know it’s popular and often deserved to hate on Microsoft, but PowerShell is a kick-ass, cross-platform, open-source, modern shell done right, even if it does have a dumb name imo. Once you start learning it you won’t want to go back to any other.

I appreciate you sharing your perspective. Mine runs counter to it.

The more PowerShell I learn, the more I dislike it.

As someone who spent 2 years learning and writing PowerShell for work… It’s… Okay. Way easier to make stuff work than Bash, and gets really powerful when you make libraries for it. But… I prefer Python and GoLang for building scripts and small apps.

Do you write it for work?

I do. Currently I use it mostly for personal stuff as part of my time spent on production support. Importing data from queries, exporting spreadsheets, reading complex json data and extracting needed info, etc. In the past when I was on DevOps used it with Jenkins and various automation processes, and I’ve used it as a developer to create test environments and test data.

Nice! Sounds like a good use case.