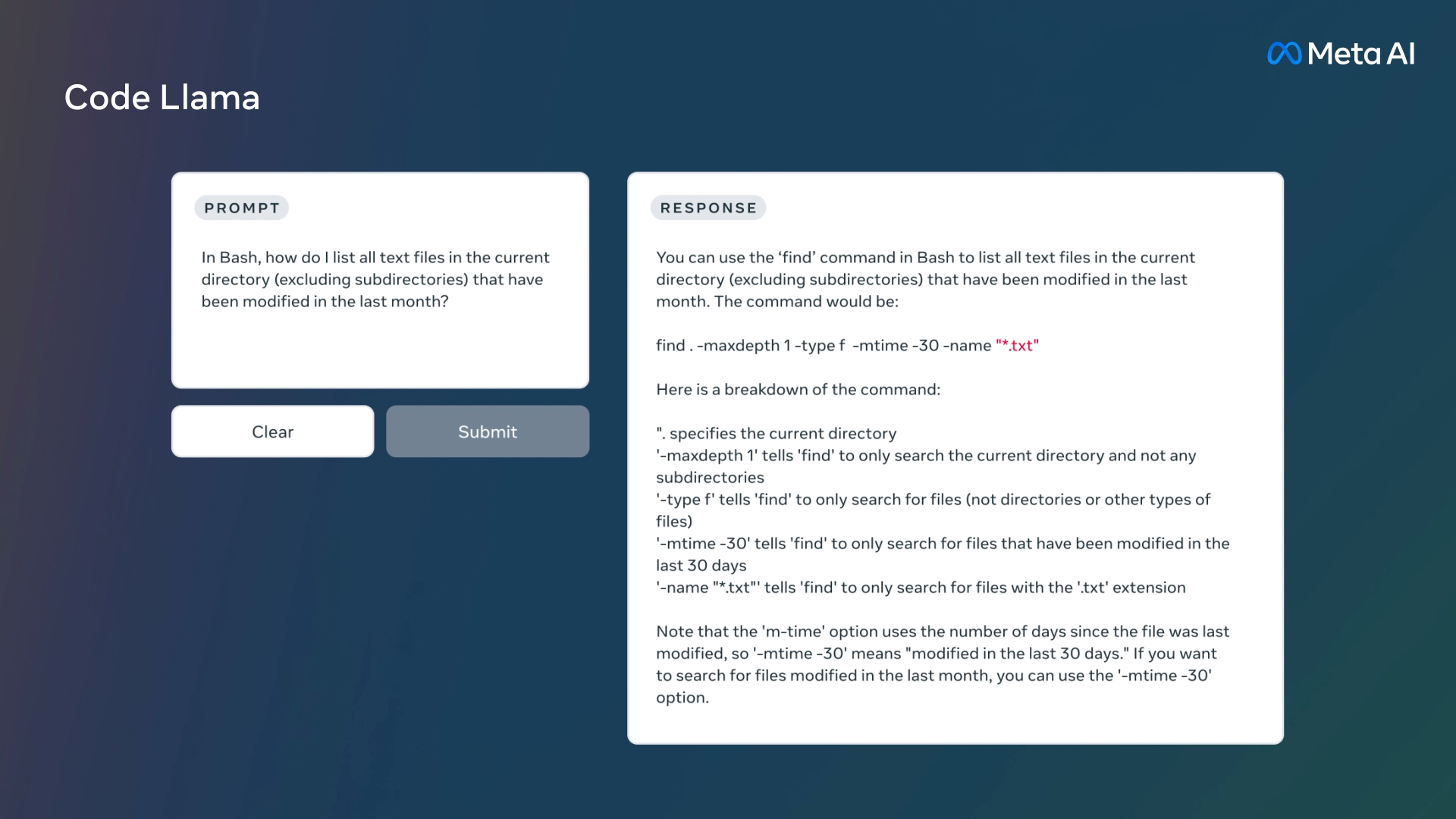

Today, we are releasing Code Llama, a large language model (LLM) that can use text prompts to generate code. Code Llama is state-of-the-art for publicly available LLMs on code tasks, and has the potential to make workflows faster and more efficient for current developers and lower the barrier to entry for people who are learning to code. Code Llama has the potential to be used as a productivity and educational tool to help programmers write more robust, well-documented software.

I’m thoroughly dubious here, especially considering that their own data showed GPT beating them by around 13% on a standard benchmark, and GPT itself is highly unreliable.

I’m also definitely not willing to upload a substantial portion of my code base to a Meta product or service so it can tell me how to use it.

It’s a model release. You run it locally on your computer without sharing back any data.

Helpful, but it still sounds like a solution to a problem I don’t have.

Sounds like a comment about a problem you don’t have XD

What?

Let’s hope it gets leaked too

Generally no need for leaks. It seems to be under the same terms as Llama 2.

We tried asking ChatGPT our interview question. After some manual syntax fixes, it performed about as well as a mediocre junior developer, i.e. it wrote multithreaded code without any synchronization.

Don’t misunderstand, it is an amazing technical achievement that it could output (mostly) correct code to solve a problem, but it is nowhere near good enough for me to use. I would have to carefully analyze any generated code for errors, rewrite bits to improve readability (rename variables to match our terminology, add comments, etc.), and who knows what else. I am not sure it will save me much time, and I am sure it will not be as good as my own code. I could see using an AI to generate sophisticated boilerplate code (code that is long, but logically trivial).
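For readers unfamiliar with the failure mode mentioned above (multithreaded code without any synchronization), here is a minimal Python sketch. It is not the interview question or the code ChatGPT produced, neither of which appears in this thread. The `Counter` class, its method names, and the thread and iteration counts are all hypothetical and chosen only for illustration.

```python
import threading

N_THREADS = 4          # hypothetical values, chosen only for illustration
N_INCREMENTS = 10_000


class Counter:
    """A shared counter with an unsynchronized and a synchronized increment."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self):
        # Read-modify-write with no synchronization: two threads can read
        # the same value, and one of the two updates is then lost.
        current = self.value
        self.value = current + 1

    def increment_safe(self):
        # The lock makes the read-modify-write sequence atomic with respect
        # to other threads that use the same lock.
        with self._lock:
            current = self.value
            self.value = current + 1


def run(method_name):
    """Run N_THREADS workers that each call the named method N_INCREMENTS times."""
    counter = Counter()
    method = getattr(counter, method_name)

    def worker():
        for _ in range(N_INCREMENTS):
            method()

    threads = [threading.Thread(target=worker) for _ in range(N_THREADS)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print("expected:", N_THREADS * N_INCREMENTS)
    print("unsafe:  ", run("increment_unsafe"))  # may fall short of expected
    print("safe:    ", run("increment_safe"))
```

The unsynchronized version can return the right total on a given run, which is exactly why this kind of bug tends to survive a quick test and needs careful review.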