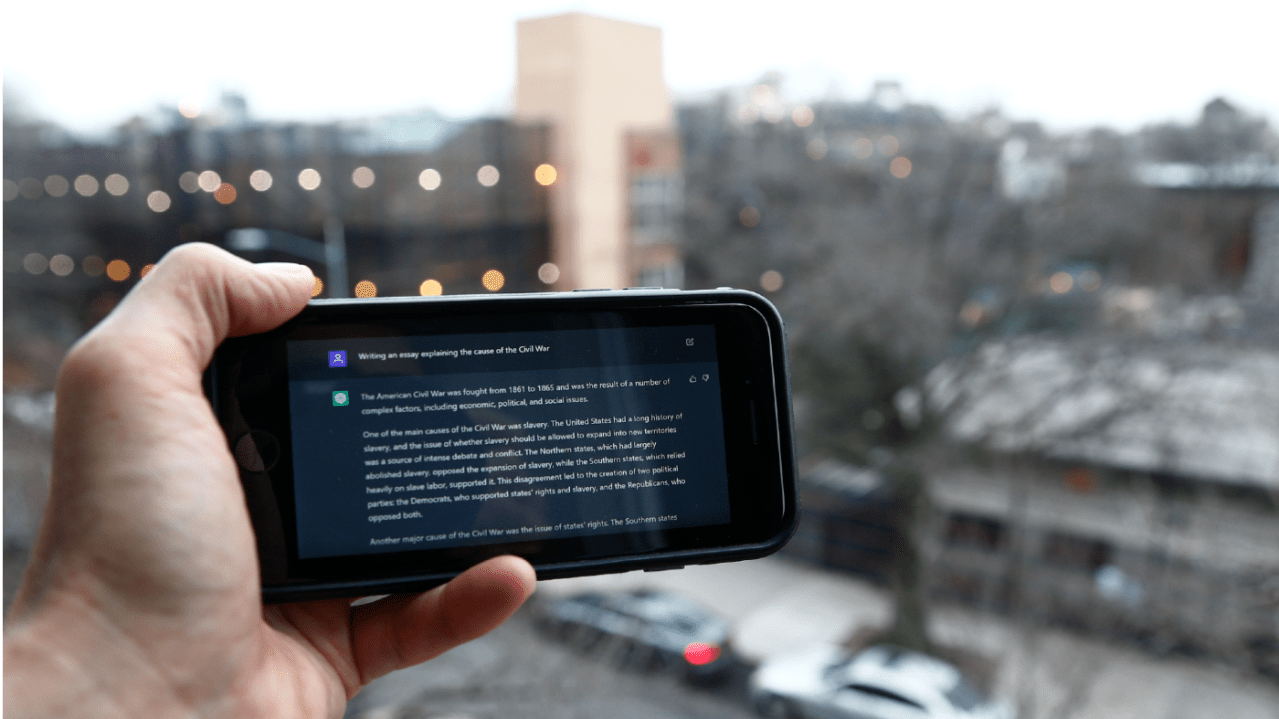

“To prevent disinformation from eroding democratic values worldwide, the U.S. must establish a global watermarking standard for text-based AI-generated content,” writes retired U.S. Army Col. Joe Buccino in an opinion piece for The Hill. While President Biden’s October executive order requires watermarking of AI-derived video and imagery, it offers no watermarking requirement for text-based content. “Text-based AI represents the greatest danger to election misinformation, as it can respond in real-time, creating the illusion of a real-time social media exchange,” writes Buccino. “Chatbots armed with large language models trained with reams of data represent a catastrophic risk to the integrity of elections and democratic norms.”

Joe Buccino is a retired U.S. Army colonel who serves as an A.I. research analyst with the U.S. Department of Defense's Defense Innovation Board. He served as U.S. Central Command communications director from 2021 until September 2023. Here’s an excerpt from his piece:

Watermarking text-based AI content involves embedding unique, identifiable information – a digital signature documenting the AI model used and the generation date – into the metadata of generated text to indicate its artificial origin. Detecting this digital signature requires specialized software, which, when integrated into platforms where AI-generated text is common, enables the automatic identification and flagging of such content. This process gets complicated when a user slightly manipulates AI-generated text. For example, a high school student may make minor modifications to a homework essay created with ChatGPT (GPT-4). These modifications may drop the digital signature from the document. However, that kind of scenario is not of great concern in the most troubling cases, where chatbots are let loose in massive numbers to accomplish their programmed tasks. Disinformation campaigns require such a large volume of output that it is no longer feasible to modify it once released.
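To make the excerpt's mechanism and its weakness concrete, here is a minimal Python sketch of a metadata-based signature. It assumes a provider-held HMAC key; the names `PROVIDER_KEY`, `SignedText`, `sign`, `verify`, and the metadata fields are hypothetical illustrations, not part of any existing standard. This is a metadata signature as the excerpt describes, not a statistical watermark woven into word choice, which is a different technique.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass

# Hypothetical signing key held by the AI provider (an assumption for this sketch).
# A real standard would more likely use public-key signatures so platforms
# could verify content without holding the provider's secret.
PROVIDER_KEY = b"example-provider-secret"


@dataclass
class SignedText:
    text: str
    metadata: dict  # hypothetical fields: "model", "generated", "signature"


def _payload(text: str, model: str, generated: str) -> bytes:
    # Canonical JSON so the signer and the verifier hash identical bytes.
    return json.dumps(
        {"text": text, "model": model, "generated": generated},
        sort_keys=True,
    ).encode("utf-8")


def sign(text: str, model: str, generated: str) -> SignedText:
    """Attach metadata recording the model and generation date, plus an HMAC tag."""
    tag = hmac.new(PROVIDER_KEY, _payload(text, model, generated), hashlib.sha256)
    return SignedText(
        text=text,
        metadata={"model": model, "generated": generated, "signature": tag.hexdigest()},
    )


def verify(doc: SignedText) -> bool:
    """Return True only if the metadata signature still matches the text."""
    meta = doc.metadata
    if not all(key in meta for key in ("model", "generated", "signature")):
        return False  # metadata stripped, e.g. by copy-pasting only the text
    expected = hmac.new(
        PROVIDER_KEY,
        _payload(doc.text, meta["model"], meta["generated"]),
        hashlib.sha256,
    ).hexdigest()
    # Constant-time comparison of the recomputed and stored tags.
    return hmac.compare_digest(expected, meta["signature"])


if __name__ == "__main__":
    doc = sign("Sample AI-generated paragraph.", "example-model", "2024-01-15")
    print(verify(doc))  # True: untouched output verifies

    # A minor edit, like the student's tweaks to an essay, breaks verification.
    edited = SignedText(doc.text.replace("Sample", "Example"), doc.metadata)
    print(verify(edited))  # False

    # Copying only the text drops the metadata, and the signature with it.
    print(verify(SignedText(doc.text, {})))  # False
```

The sketch illustrates the trade-off the excerpt points to: a signature bound to the exact text is easy to check automatically at scale, but any edit, or simply stripping the metadata, makes the content indistinguishable from unsigned text.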

The U.S. should create a standard digital signature for text, then partner with the EU and China to lead the world in adopting this standard. Once such a global standard is established, the next step will follow – social media platforms adopting the metadata recognition software and publicly flagging AI-generated text. Social media giants are sure to respond to international pressure on this issue. The call for a global watermarking standard must navigate diverse international perspectives and regulatory frameworks. A global standard for watermarking AI-generated text ahead of 2024’s elections is ambitious – an undertaking that encompasses diplomatic and legislative complexities as well as technical challenges. A foundational step would involve the U.S. publicly accepting and advocating for a standard of marking and detection. This must be followed by a global campaign to raise awareness about the implications of AI-generated disinformation, involving educational initiatives and collaborations with the giant tech companies and social media platforms.

In 2024, generative AI and democratic elections are set to collide. Establishing a global watermarking standard for text-based generative AI content represents a commitment to upholding the integrity of democratic institutions. The U.S. has the opportunity to lead this initiative, setting a precedent for responsible AI use worldwide. The successful implementation of such a standard, coupled with the adoption of detection technologies by social media platforms, would represent a significant stride towards preserving the authenticity and trustworthiness of democratic norms.

Excerpt credit: https://slashdot.org/story/423285

So what happens when we can’t trust everything we read on the Internet anymore?

Spoiler alert: we’ve never been able to trust everything we read on the internet.

In relative terms we could.

The amount of disinformation and propaganda is about to become obscene.

Except, no, you can’t. The whole “you swallow seven spiders a year in your sleep” thing was a rumor created specifically to show how easy it is to start rumors. And how many times has that little gem been floating around the internet? Or how often do you hear experts say that people talking about their field on the Internet are flat-out wrong, but sound charismatic, so they get the upvote?

The Internet is full of DATA. It’s always been up to you to parse that info and decide what’s credible and what’s not. The difference now is that the critical thinking required to even access the Internet is basically nil and now everyone is on there.

I guess you don’t know what’s coming. Is there a lot of misinformation now? Certainly. But I’d say less than half the data is false.

In the coming months you’re going to start seeing social media taken over by AI. You’re going to see pointed political “opinions” followed by several comments agreeing with the point being pushed. These are going to outnumber human comments.

Currently, shills absolutely exist, but they’re far outnumbered by genuine people. That’s about to change. Money is going to buy public opinion on a whole new scale unless we learn to ignore anonymous social media.

If you think that doesn’t already exist, you’ve been living under a rock. The Dead Internet Theory is pretty old at this point. I’m not saying you’re wrong, I’m saying that some of us have seen this trend coming long before AI was a buzzword and have been watching it already happen around us. I very much know what is coming because I’ve already watched it happen.

Yeah, I mean 2015 was a big turning point, but this one should be bigger. It’s not black and white.

Exactly, it’s not black and white. It’s gray and grayer. And you’re telling me “it’s gonna be black!” and I’m telling you “it’s already gray, and it’s about to become even grayer”. This isn’t a turning point either. It’s just a predictable progression down a path that we started on decades ago. Some of us have been raising the alarm over this for a very long time. You’re coming to the trenches fresh-faced trying to school me, and I’m already war-torn and fatigued.

It’s not even about trust. It’s that I am confident I will soon have no clue who is a real-life human being anymore. Autogenerated images, video, and text are practically in their infancy, but they already sit in an uncanny valley where it is impossible to tell what is real and what is not. Imagine 5 years from now, when perfectly lifelike high-res video of practically anything you can imagine can be generated on the fly. Essentially the only thing I will have any certainty about is what I can witness in person. Or, if I have a circle of trust, I can choose to believe content published by certain organizations or groups.

It may actually push us away from tech and back to the community, which could be good assuming we survive the transition.

Looks pretty good to be a dolphin right now.

The same thing that has been happening for the past 2 decades.

I see that as a great opportunity for journalism.